Inspired by the rapid advancements in generative AI, we asked ourselves, “In what ways can we use WebAssembly (Wasm) to enhance the developer experience?” To answer this question, we will explore how to build an application, called Chainsocket, that allows developers to chain together “plug-ins” written in a variety of languages, each capable of harnessing the power of compute, AI, and the web.

Note: This project is a proof of concept born out of a recent Dylibso company-wide hackathon and is intended for inspirational purposes. Please use it responsibly!

Goals

- Enable the creation of plug-ins, written in a variety of source languages, that provide granular business logic: running input against generative AI models (via a REST API, as with ChatGPT or Claude, or built in, as with Llama2.c), performing a specific computational task, or combining the two to complete a sequence of tasks.

- Provide data schemas and a mechanism for plug-ins to perform tasks for one another

- Empower users to build apps using a simple configuration file

- Apps should be able to run across diverse environments (e.g., browser-JS, Rust server, Edge) while minimizing, or eliminating, the need for re-implementation

- Host environments should be able to execute the arbitrary code encapsulated in the plug-ins without sacrificing their own security.

- Lay the groundwork for an extensive ecosystem of plug-in development and distribution.

Why WebAssembly?

WebAssembly is well suited to realizing these goals. For one, many programming languages already compile to Wasm, with more on the horizon, which aligns with our goal of offering diverse language support for Chainsocket plug-ins. Moreover, the ability to write WebAssembly modules once and deploy them across different host systems addresses the need for application portability. Finally, WebAssembly’s secure code execution and memory isolation provide an ideal foundation for cultivating a safe ecosystem of plug-in development and distribution.

We will use the WebAssembly framework Extism to take advantage of these features.

What is Extism?

Extism is a framework that provides a powerful, developer-friendly abstraction layer over raw WebAssembly runtimes (e.g., Wasmtime, Wasmer, V8). We use it here to get past a number of low-level obstacles that we would face if we used the runtimes directly. Here are a few examples:

- It can be challenging to embed Wasm runtimes directly into a wide variety of programming languages, which we need here to make our AI apps highly portable. Extism simplifies this by providing out-of-the-box support for 17 host languages through SDKs with idiomatic interfaces.

- The core Wasm specification only provides basic numeric types such as integers and floats, with no built-in way to pass strings or more complex data structures between the host and a module. This is a problem for our use case, which relies heavily on parsing and manipulating strings because of the large language models involved. We could work around this limitation by passing pointers around and managing memory directly (i.e., implementing our own Application Binary Interface), but that’s a lot of work. Extism handles this for us, so we can focus on the core application.

- By default, Wasm only provides compute capabilities within a tightly controlled sandbox that lacks direct access to the host system (e.g., writing to a log file or making network calls). Any other capabilities must be explicitly granted by the host application. Extism provides constructs that simplify orchestrating this capability-based security model.
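
To make the second point concrete, here is a toy Python model of the bookkeeping Extism spares us. A string is written into a module’s linear memory, and only a single integer handle crosses the boundary. The LinearMemory class, the bump allocator, and the pack/unpack encoding are illustrative assumptions. They are not Extism’s actual ABI.

```python
from typing import Tuple


class LinearMemory:
    """Toy model of a Wasm instance's linear memory: one flat byte array."""

    PAGE_SIZE = 64 * 1024  # Wasm memory is sized in 64 KiB pages

    def __init__(self, pages: int = 1) -> None:
        self.data = bytearray(pages * self.PAGE_SIZE)
        self.next_free = 0  # naive bump allocator: nothing is ever freed

    def alloc(self, size: int) -> int:
        if self.next_free + size > len(self.data):
            raise MemoryError("out of linear memory")
        offset = self.next_free
        self.next_free += size
        return offset


def pack(offset: int, length: int) -> int:
    # Wasm functions only exchange numbers, so squeeze (offset, length) into one 64-bit int.
    return (offset << 32) | length


def unpack(packed: int) -> Tuple[int, int]:
    return packed >> 32, packed & 0xFFFFFFFF


def write_string(mem: LinearMemory, text: str) -> int:
    encoded = text.encode("utf-8")
    offset = mem.alloc(len(encoded))
    mem.data[offset:offset + len(encoded)] = encoded
    return pack(offset, len(encoded))


def read_string(mem: LinearMemory, packed: int) -> str:
    offset, length = unpack(packed)
    return bytes(mem.data[offset:offset + length]).decode("utf-8")


def guest_shout(mem: LinearMemory, packed_input: int) -> int:
    # The "guest" only receives an integer and must know how to find the string it names.
    text = read_string(mem, packed_input)
    return write_string(mem, text.upper() + "!")
```

Even this toy version needs an allocator, an encoding convention, and agreement on both sides about who owns which bytes. That is exactly the kind of plumbing the Extism SDKs and PDKs take care of.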

Design and Implementation

Chainsocket’s design has three main components: an application configuration file, a host application, and the plug-ins. In short, the plug-ins provide the bulk of the business logic, the host application orchestrates the interaction between plug-ins, and the configuration file lets users combine and customize (via settings) the plug-ins in interesting ways to create AI-powered applications.

The Plug-ins

Drawing upon concepts seen in LangChain, Chainsocket features three types of plug-ins:

- Large Language Model (LLM): Takes a string as input and feeds it into a generative AI model, either through a network call or by running its own local model.

- Tool: Performs specialized services that augment the capabilities of LLMs, such as retrieving data from a vector database (e.g. Pinecone), making calls to a web service (e.g. Google search), data transformation, mathematical calculations, and so on.

- Agent: Uses one or more generative AI models as its primary means of computation, leveraging Tools and LLMs as needed to carry out tasks. An Agent can be as basic as passing input to an LLM and returning the output, or something more complex that makes multiple calls to one or more LLMs and/or Tools and applies any other business logic needed. The ReAct Agent in LangChain is an apt example.

The existing Chainsocket plug-ins were all written in Rust, but plug-ins could be written in any language that compiles to WebAssembly. This flexibility makes it easy to draw on a diverse range of languages when creating plug-ins and helps build an extensive library of reusable components.

We used Extism Plug-in Development Kits (PDKs) to improve the developer experience beyond what core WebAssembly offers. One noteworthy feature is a configuration mechanism that passes a variety of settings (such as prompts, API keys, and plug-in names) from the Chainsocket host to the plug-ins. This gives app creators more flexibility to tailor their applications via the configuration file (detailed below). Additionally, because plug-ins can call on one another to tackle tasks (e.g., Agents invoking LLMs or using Tools), access to log information is essential for tracing the flow of logic and debugging issues. To address this, we used Extism’s logging functions to extract this information from the Wasm modules.
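
Here is a minimal sketch of how a Python host might turn one plug-in’s settings into the manifest it hands to Extism. It assumes Extism’s manifest layout: a wasm list, a string-to-string config map, and an optional allowed_hosts list. The entry’s field names and the choice to JSON-encode non-string settings are our own illustrative assumptions.

```python
import json
from typing import Any, Dict

# Keys consumed by the host itself rather than forwarded to the plug-in (an assumption).
HOST_ONLY_KEYS = {"plugin_name", "allowed_hosts"}


def plugin_manifest(entry: Dict[str, Any]) -> Dict[str, Any]:
    """Turn one plug-in entry from the app config into an Extism-style manifest dict."""
    config: Dict[str, str] = {}
    for key, value in entry.items():
        if key in HOST_ONLY_KEYS:
            continue
        # Plug-in config values are plain strings, so lists, numbers, etc. are JSON-encoded.
        config[key] = value if isinstance(value, str) else json.dumps(value)
    manifest: Dict[str, Any] = {"wasm": [{"path": entry["plugin_name"]}], "config": config}
    if "allowed_hosts" in entry:
        # Network access is granted per plug-in, never by default.
        manifest["allowed_hosts"] = list(entry["allowed_hosts"])
    return manifest
```

With the Extism Python SDK, the resulting dict would then be passed to something like extism.Plugin(manifest, wasi=True). On the guest side, the PDK lets each plug-in read these config values by key.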

App configuration

The configuration file serves as the primary mechanism for creating a distinct AI application. Within this file, users can define various Tools, LLMs, and Agents through attributes:

- A name, which is the unique identifier for the plug-in. The host application uses it to route requests between plug-ins.

- A description, providing a summary of the plug-in’s capabilities.

- A plugin_name, which specifies the path to the Wasm module providing the implementation.

In the case of an Agent, some additional attributes are available:

- A prompt, which the Agent will use as the system prompt when it calls its configured LLM.

- A list of LLMs and Tools, which are the unique names of the corresponding plug-ins that the Agent will use to carry out tasks.

The Agent also serves as the entry point into the application. The Chainsocket host routes all input received from the application’s user directly to the configured Agent, which carries out the rest of the workflow.
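
The post does not show the file format itself, so the following sketch assumes JSON with llms, tools, and agents sections. Those section names, the agent’s llms/tools keys, the file paths, and the one-Agent rule are hypothetical. Only name, description, plugin_name, and prompt come from the attribute list above. The validator checks the cross-references the host relies on when routing.

```python
import json
from typing import Dict, List

REQUIRED = ("name", "description", "plugin_name")

# Hypothetical example config; paths and section names are placeholders.
EXAMPLE_CONFIG = """
{
  "llms": [{"name": "chatgpt", "description": "OpenAI chat model",
            "plugin_name": "plugins/chatgpt.wasm"}],
  "tools": [{"name": "google", "description": "Google search via SerpAPI",
             "plugin_name": "plugins/serpapi.wasm"}],
  "agents": [{"name": "chatbot", "description": "Conversational agent",
              "plugin_name": "plugins/conversational.wasm",
              "prompt": "You are a cheerful assistant.",
              "llms": ["chatgpt"], "tools": ["google"]}]
}
"""


def validate_config(text: str) -> List[str]:
    """Return a list of problems found in an app config (empty means it looks valid)."""
    cfg = json.loads(text)
    errors: List[str] = []
    declared: Dict[str, str] = {}  # plug-in name -> section it was declared in

    for section in ("llms", "tools", "agents"):
        for entry in cfg.get(section, []):
            missing = [key for key in REQUIRED if key not in entry]
            if missing:
                errors.append(f"{section}: entry is missing {', '.join(missing)}")
                continue
            if entry["name"] in declared:
                errors.append(f"duplicate plug-in name: {entry['name']}")
            declared[entry["name"]] = section

    agents = cfg.get("agents", [])
    # Assumption: exactly one Agent acts as the app's entry point.
    if len(agents) != 1:
        errors.append(f"expected exactly one agent, found {len(agents)}")
    for agent in agents:
        for ref_section in ("llms", "tools"):
            for ref in agent.get(ref_section, []):
                # Each reference must name a plug-in declared in the matching section.
                if declared.get(ref) != ref_section:
                    errors.append(f"agent {agent.get('name')!r}: {ref!r} is not a configured "
                                  f"{ref_section[:-1]}")
    return errors
```

Checks like these run before any Wasm module is loaded, so a typo in a plug-in name fails fast instead of surfacing as a routing error mid-conversation.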

Host Application

The Host application is implemented in Python using the Extism Python Host SDK. Its primary tasks are to:

- Load up the configuration file and instantiate the plug-ins, passing along their associated settings.

- Route requests between the plug-ins (e.g., an Agent calling an LLM or Tool). The plug-ins communicate via a JSON API, with a specific schema for each plug-in type. For instance, an Agent plug-in must format its request to an LLM plug-in using the LLMReq schema.

- Provide an interface between the user and the rest of the application. In this case, a simple loop that collects input from the command line and forwards it to the configured Agent.

- Provide additional system capabilities to the plug-ins (e.g., file I/O and HTTP requests).

- Allow components to retain “memory” and update their knowledge over time
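
Here is a minimal sketch of the routing role. It assumes each plug-in is reduced to a function from a JSON string to a JSON string; in Chainsocket, that function is an exported call function on a Wasm module. The Host class, the echo LLM, and the request fields system, prompt, input, and output are hypothetical stand-ins, since the post does not spell out the actual LLMReq schema. The toy agent keeps its running history in an ordinary Python list, standing in for the memory a real plug-in would retain.

```python
import json
from typing import Callable, Dict, Iterable, Iterator, List

# Hypothetical model: a plug-in is a function from a JSON string to a JSON string.
PluginFn = Callable[[str], str]


class Host:
    """Routes JSON requests to plug-ins by their unique configured name."""

    def __init__(self) -> None:
        self.plugins: Dict[str, PluginFn] = {}

    def register(self, name: str, fn: PluginFn) -> None:
        if name in self.plugins:
            raise ValueError(f"duplicate plug-in name: {name}")
        self.plugins[name] = fn

    def call(self, name: str, request: dict) -> dict:
        if name not in self.plugins:
            raise KeyError(f"unknown plug-in: {name}")
        # Only strings cross the plug-in boundary, so requests and replies are JSON-encoded.
        return json.loads(self.plugins[name](json.dumps(request)))


def echo_llm(raw: str) -> str:
    """Stand-in LLM plug-in; `system` and `prompt` are hypothetical LLMReq fields."""
    req = json.loads(raw)
    history_lines = req["system"].count("\n")
    return json.dumps({"text": f"({history_lines} history lines) You said: {req['prompt']}"})


class ConversationalAgent:
    """Toy Agent that resends its whole conversation history as the system prompt."""

    def __init__(self, host: Host, llm_name: str, persona: str) -> None:
        self.host, self.llm_name, self.persona = host, llm_name, persona
        self.history: List[str] = []  # stands in for memory kept inside the Wasm instance

    def __call__(self, raw: str) -> str:
        user_input = json.loads(raw)["input"]
        system = "\n".join([self.persona, *self.history])
        reply = self.host.call(self.llm_name, {"system": system, "prompt": user_input})["text"]
        self.history += [f"Human: {user_input}", f"AI: {reply}"]
        return json.dumps({"output": reply})


def run_session(host: Host, agent_name: str, lines: Iterable[str]) -> Iterator[str]:
    """Forward each line of user input to the configured Agent, the app's entry point."""
    for line in lines:
        yield host.call(agent_name, {"input": line})["output"]
```

For the command-line loop, something like `for reply in run_session(host, "chatbot", iter(input, "quit")): print(reply)` keeps reading lines until the user types quit. The generator returns each reply as soon as it is produced.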

The business logic in the Host Application is kept intentionally lightweight to maximize the portability of the app, with the configuration file and the platform-independent Wasm modules providing the bulk of the functionality. This would let us create, for instance, a JavaScript host app based on the Extism Browser SDK that reuses the same app config file and set of plug-ins, perhaps with a slick user interface for generating the config file.

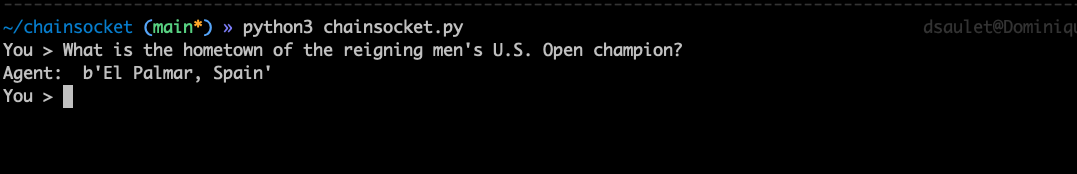

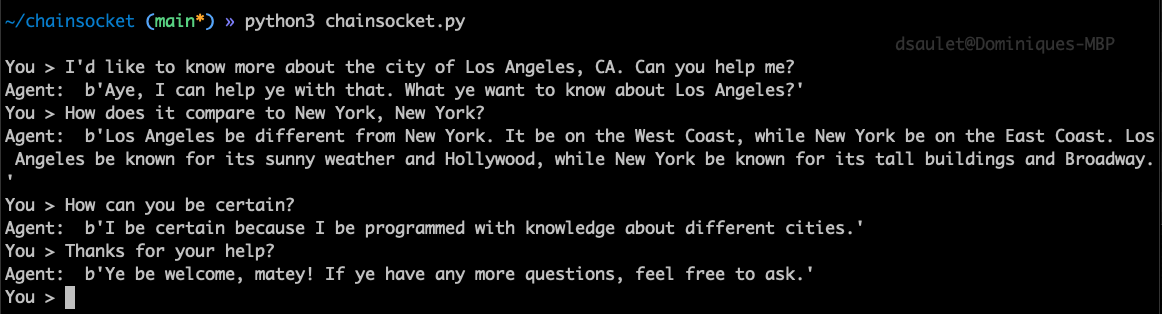

Demo Application

For demonstration purposes, let’s look at an example set of plug-ins that feature an LLM, a Tool, and two Agents that provide two distinct flavors of a chatbot-style application.

- First up is an LLM plug-in that calls ChatGPT via the OpenAI REST API. For a more detailed description of how this works, take a look at this post here.

- Next is a Tool plug-in that performs a Google search via SerpAPI. It leverages the HTTP request host function provided by Extism to access the host system’s networking capabilities, with the host application granting access to the domain in the configuration. It also provides parsing logic to cleanly extract the search results.
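
Both plug-ins reach the network only through the host. The sketch below, written in Python for readability rather than as the Rust plug-ins, shows the requests they might build and a host-side check against a configured allowlist. The endpoints and the messages, organic_results, and snippet fields follow the public OpenAI and SerpAPI JSON formats as we understand them. The function names, the default model name, and the fnmatch-style wildcard matching are assumptions rather than Chainsocket’s or Extism’s exact behavior.

```python
import json
from fnmatch import fnmatchcase
from typing import List, Tuple
from urllib.parse import urlencode, urlparse

OPENAI_CHAT_URL = "https://api.openai.com/v1/chat/completions"
SERPAPI_URL = "https://serpapi.com/search.json"


def host_allows(url: str, allowed_hosts: List[str]) -> bool:
    """Host-side capability check: only configured domains may be contacted."""
    hostname = urlparse(url).hostname or ""
    return any(fnmatchcase(hostname, pattern) for pattern in allowed_hosts)


def chat_request(system: str, prompt: str, model: str = "gpt-3.5-turbo") -> Tuple[str, str]:
    """Build the URL and JSON body an LLM plug-in might send to OpenAI's chat API."""
    body = {
        "model": model,  # placeholder model name
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": prompt},
        ],
    }
    return OPENAI_CHAT_URL, json.dumps(body)


def search_url(query: str, api_key: str) -> str:
    """Build a SerpAPI Google search URL for the Tool plug-in."""
    return f"{SERPAPI_URL}?{urlencode({'engine': 'google', 'q': query, 'api_key': api_key})}"


def top_snippets(response_json: str, limit: int = 3) -> List[str]:
    """Keep only the text snippets from a SerpAPI response, dropping everything else."""
    results = json.loads(response_json).get("organic_results", [])
    return [r["snippet"] for r in results if "snippet" in r][:limit]
```

Because the allowlist check lives in the host, a plug-in that tries to contact an unlisted domain is refused no matter what URL it builds.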

Two different types of Agent are provided:

- A “Self-ask with Search Agent”, which is almost entirely based on the research and proof of concept found here. It sends a series of requests to the two plug-ins above and implements parsing logic to break a complex query down into smaller ones, using Google searches to obtain more recent information. The code is an almost direct translation of the original Python into Rust, with ChatGPT doing the bulk of the conversion.

  - Note: this Agent uses a built-in four-shot prompt that is sent to the LLM plug-in as a key component of its business logic.

- A Conversational Agent. We give it a “personality” through the prompt attribute in the configuration file, and it keeps a running history of the conversation with its human counterpart using Extism’s advanced memory feature, which gives modules additional “variables” that can be shared between functions in the same module instance. On each call to the LLM plug-in, it sends this ever-increasing history as a system prompt to create the chatbot experience.
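
The heart of the Self-ask with Search Agent is a parsing loop. Here is a Python sketch of that loop, not the Rust Agent itself. It assumes the LLM continues the transcript in the self-ask format (Follow up: / Intermediate answer: / So the final answer is:) and stops before writing its own intermediate answer. The llm and search callables stand in for calls routed through the host to the LLM and Tool plug-ins. FEW_SHOT_PROMPT is an abbreviated placeholder for the real four-shot prompt.

```python
from typing import Callable, Optional

FOLLOW_UP = "Follow up:"
INTERMEDIATE = "Intermediate answer:"
FINAL = "So the final answer is:"

# Placeholder for the Agent's built-in four-shot prompt; only one example is shown.
FEW_SHOT_PROMPT = (
    "Question: Who lived longer, Muhammad Ali or Alan Turing?\n"
    "Are follow up questions needed here: Yes.\n"
    "Follow up: How old was Muhammad Ali when he died?\n"
    "Intermediate answer: Muhammad Ali was 74 years old when he died.\n"
    "Follow up: How old was Alan Turing when he died?\n"
    "Intermediate answer: Alan Turing was 41 years old when he died.\n"
    "So the final answer is: Muhammad Ali\n\n"
)


def self_ask(question: str, llm: Callable[[str], str], search: Callable[[str], str],
             max_steps: int = 5) -> Optional[str]:
    """Split a question into searched follow-ups until the LLM states a final answer."""
    transcript = f"Question: {question}\nAre follow up questions needed here:"
    for _ in range(max_steps):
        completion = llm(FEW_SHOT_PROMPT + transcript)
        transcript += completion
        if FINAL in completion:
            return completion.split(FINAL, 1)[1].strip()
        lines = completion.strip().splitlines()
        if not lines or not lines[-1].startswith(FOLLOW_UP):
            return None  # unexpected format: give up rather than loop
        follow_up = lines[-1][len(FOLLOW_UP):].strip()
        if not transcript.endswith("\n"):
            transcript += "\n"
        # Answer the sub-question with the search Tool and feed the result back to the LLM.
        transcript += f"{INTERMEDIATE} {search(follow_up)}\n"
    return None
```

Capping the loop with max_steps keeps a model that never commits to a final answer from issuing searches indefinitely.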

Future Considerations and Alternative Paths

- Load plug-ins from a centralized or federated registry instead of from the file system.

- Extract additional observability data (traces and metrics) from the plug-ins to help with performance optimization and debugging. Grabbing telemetry data from Wasm is currently a challenge, but tools such as this are making it much easier.

- More robust validation of the configuration file

- Add automated checks to ensure plug-ins are compatible with a Chainsocket host (e.g., all plug-ins must export a “call” function). Tools such as Modsurfer can help with this.

- And of course, the creation of many more Agents, Tools, and generative AI models as plug-ins that can be mixed, matched, and distributed as WebAssembly modules to create all sorts of applications.
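
As a rough illustration of the compatibility check, the following Python sketch reads a module’s export section straight from the Wasm binary format and tests for a function named call. It only understands the version-1 binary layout, skips every other section without parsing it, and does not check the function’s signature. It is not how Modsurfer works, and the function names are our own.

```python
from typing import List, Tuple

EXPORT_KINDS = {0: "func", 1: "table", 2: "memory", 3: "global"}
EXPORT_SECTION_ID = 7


def read_u32(data: bytes, pos: int) -> Tuple[int, int]:
    """Decode an unsigned LEB128 integer; return (value, position after it)."""
    result, shift = 0, 0
    while True:
        byte = data[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not (byte & 0x80):
            return result, pos
        shift += 7


def list_exports(wasm: bytes) -> List[Tuple[str, str]]:
    """Return (name, kind) for every export in a binary Wasm module."""
    if wasm[:4] != b"\x00asm" or wasm[4:8] != b"\x01\x00\x00\x00":
        raise ValueError("not a version-1 Wasm binary")
    exports: List[Tuple[str, str]] = []
    pos = 8
    while pos < len(wasm):
        section_id = wasm[pos]
        size, pos = read_u32(wasm, pos + 1)
        section_end = pos + size
        if section_id == EXPORT_SECTION_ID:
            count, p = read_u32(wasm, pos)
            for _ in range(count):
                name_len, p = read_u32(wasm, p)
                name = wasm[p:p + name_len].decode("utf-8")
                kind = wasm[p + name_len]
                _index, p = read_u32(wasm, p + name_len + 1)
                exports.append((name, EXPORT_KINDS.get(kind, f"unknown({kind})")))
        pos = section_end  # jump over the section, parsed or not
    return exports


def is_chainsocket_compatible(wasm: bytes) -> bool:
    # Rule from the post: every plug-in must export a function named "call".
    return ("call", "func") in list_exports(wasm)
```

In practice the bytes would come from reading each plugin_name path in binary mode before the host instantiates anything.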

And that’s all folks!

- Dominique Saulet, Director of TPM
